Digital Twin of Human Lungs: Towards Real-Time Simulation and Registration of Soft Organs

ICCB 2025

LMS, École Polytechnique, Paris, France

September 10, 2025

Idiopathic Pulmonary Fibrosis

Objectives

- Improved understanding of the mechanically-driven physiological mechanisms

- In silico prognostics

- Transfer tools to the clinic

- Patient-specific digital twins

Requirements

- Mechanical model of the lung (Patte et al., 2022; Peyraut & Genet, 2024)

- Uncertainty quantification (Laville et al., 2023; Peyraut & Genet, 2025)

- Real-time estimation of the parameters

Lung poro-mechanics (Patte et al., 2022).

\[ \definecolor{Violet}{RGB}{162, 32, 185} \definecolor{Teal}{RGB}{0, 103, 127} \definecolor{Blue}{RGB}{58, 70, 245} \definecolor{Green}{RGB}{0,103,127} \definecolor{LGreen}{RGB}{62,128,102} \definecolor{red}{RGB}{206,0,55} \]

Weak formulation:

\[ \begin{cases} \int_{\Omega} \boldsymbol{\Sigma} : d_{\boldsymbol{u}}\boldsymbol{E}\left( \boldsymbol{u^*} \right) ~\mathrm{d}\Omega= W_{\text{ext}}\left(\boldsymbol{u},\boldsymbol{u^*}\right) ~\forall \boldsymbol{u^*} \in H^1_0\left(\Omega \right) \\[4mm] \int_{\Omega} \left(p_f + \frac{\partial \Psi \left(\boldsymbol{u},\Phi_f\right)}{\partial \Phi_s}\right) \Phi_f^* ~\mathrm{d}\Omega= 0 ~\forall \Phi_f^* \end{cases} \label{eq:MechPb_lung} \]

Kinematics:

- \(\boldsymbol{F} = \boldsymbol{1} + \boldsymbol{\nabla}\boldsymbol{u}\) - deformation gradient

- \(\boldsymbol{C} = \boldsymbol{F}^T \cdot \boldsymbol{F}\) - right Cauchy-Green tensor

- \(\boldsymbol{E} = \frac{1}{2}\left(\boldsymbol{C} -\boldsymbol{1} \right)\) - Green-Lagrange tensor

- \(I_1 = \text{tr}\left(\boldsymbol{C} \right)\) - first invariant of \(\boldsymbol{C}\)

- \(I_2 = \frac{1}{2} \left(\text{tr}\left(\boldsymbol{C} \right)^2 - \text{tr}\left( \boldsymbol{C}^2 \right) \right)\) - second invariant of \(\boldsymbol{C}\)
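
As a minimal numerical sketch of the kinematic quantities listed above, assuming a homogeneous displacement gradient chosen purely for illustration (the function name `kinematics` and the 10 % stretch are hypothetical, not taken from the talk):

```python
import numpy as np

def kinematics(grad_u):
    """Return F, C, E, I1, I2 for a 3x3 displacement gradient."""
    identity = np.eye(3)
    F = identity + grad_u                              # deformation gradient
    C = F.T @ F                                        # right Cauchy-Green tensor
    E = 0.5 * (C - identity)                           # Green-Lagrange tensor
    I1 = np.trace(C)                                   # first invariant of C
    I2 = 0.5 * (np.trace(C) ** 2 - np.trace(C @ C))    # second invariant of C
    return F, C, E, I1, I2

# Illustrative case: 10 % uniaxial stretch along the first axis.
grad_u = np.diag([0.1, 0.0, 0.0])
F, C, E, I1, I2 = kinematics(grad_u)
```

For this stretch, \(\boldsymbol{C} = \mathrm{diag}(1.21, 1, 1)\), so the invariants can be checked by hand.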

Behaviour

- \(\boldsymbol{\Sigma} = \frac{\partial \left(\Psi_s + \Psi_f\right)}{\partial \boldsymbol{E}} = \frac{\partial \Psi_s }{\partial \boldsymbol{E}} + p_f J \boldsymbol{C}^{-1}\) - second Piola-Kirchhoff stress tensor

- \(p_f = -\frac{\partial \Psi_s}{\partial \Phi_s}\) - fluid pressure

- \(\Psi_s\left(\boldsymbol{E}, \Phi_s \right) = W_{\text{skel}} + W_{\text{bulk}}\) \[\begin{cases} W_{\text{skel}} = \beta_1\left(I_1 - \text{tr}\left(I_d\right) -2 \text{ln}\left(\mathrm{det}\left(\boldsymbol{F} \right)\right)\right) + \beta_2 \left(I_2 -3 -4\text{ln}\left(\mathrm{det}\left(\boldsymbol{F} \right)\right)\right) + \alpha \left( e^{\delta\left(\mathrm{det}\left(\boldsymbol{F} \right)^2-1-2\text{ln}\left(\mathrm{det}\left(\boldsymbol{F} \right)\right) \right)} -1\right)\\ W_{\text{bulk}} = \kappa \left( \frac{\Phi_s}{1-\Phi_{f0}} -1 -\text{ln}\left( \frac{\Phi_s}{1-\Phi_{f0}}\right) \right) \end{cases}\]

- \(\Phi_s\) - current volume fraction of solid, pulled back to the reference configuration
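
The two energy contributions above can be evaluated directly; the following is a sketch only, assuming a 3D setting (so \(\text{tr}\left(I_d\right) = 3\)) and placeholder material parameters that are not physiological values from the cited model. The function names are hypothetical.

```python
import numpy as np

def skeleton_energy(F, beta1, beta2, alpha, delta):
    """W_skel as written above, in 3D (tr(I_d) = 3)."""
    C = F.T @ F
    J = np.linalg.det(F)
    I1 = np.trace(C)
    I2 = 0.5 * (I1 ** 2 - np.trace(C @ C))
    return (beta1 * (I1 - 3.0 - 2.0 * np.log(J))
            + beta2 * (I2 - 3.0 - 4.0 * np.log(J))
            + alpha * (np.exp(delta * (J ** 2 - 1.0 - 2.0 * np.log(J))) - 1.0))

def bulk_energy(phi_s, phi_f0, kappa):
    """W_bulk as written above."""
    ratio = phi_s / (1.0 - phi_f0)
    return kappa * (ratio - 1.0 - np.log(ratio))

# Placeholder parameters (assumptions for illustration only).
params = dict(beta1=1.0, beta2=0.5, alpha=0.1, delta=0.5)
w_rest = skeleton_energy(np.eye(3), **params)
w_stretched = skeleton_energy(np.diag([1.1, 1.0, 1.0]), **params)
w_bulk_rest = bulk_energy(phi_s=0.3, phi_f0=0.7, kappa=1.0)
```

Both energies vanish in the reference state (\(\boldsymbol{F} = \boldsymbol{1}\), \(\Phi_s = 1 - \Phi_{f0}\)), which is a quick sanity check of the expressions.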

Simpler mechanical problem - surrogate modelling

- Parametrised (patient-specific) mechanical problem

\[ \boldsymbol{u} = \mathop{\mathrm{arg\,min}}_{H^1\left(\Omega \right)} \int_{\Omega} \Psi\left( \boldsymbol{E}\left(\boldsymbol{u}\left(\textcolor{Blue}{\boldsymbol{x}}, \textcolor{LGreen}{\left\{\mu_i\right\}_{i \in \mathopen{~[\!\![~}1, \beta \mathclose{~]\!\!]}}}\right)\right) \right) ~\mathrm{d}\Omega- W_{\text{ext}}\left(\textcolor{Blue}{\boldsymbol{x}}, \textcolor{LGreen}{\left\{\mu_i\right\}_{i \in \mathopen{~[\!\![~}1, \beta \mathclose{~]\!\!]}}}\right) \]

Kinematics:

- \(\boldsymbol{F} = \boldsymbol{1} + \boldsymbol{\nabla}\boldsymbol{u}\) - deformation gradient

- \(\boldsymbol{C} = \boldsymbol{F}^T \cdot \boldsymbol{F}\) - right Cauchy-Green tensor

- \(\boldsymbol{E} = \frac{1}{2}\left(\boldsymbol{C} -\boldsymbol{1} \right)\) - Green-Lagrange tensor

- \(I_1 = \text{tr}\left(\boldsymbol{C} \right)\) - first invariant of \(\boldsymbol{C}\)

Behaviour - Saint Venant-Kirchhoff

\(\Psi = \frac{\lambda}{2} \text{tr}\left(\boldsymbol{E}\right)^2 + \mu \boldsymbol{E}:\boldsymbol{E}\)

- Unstable under compression

- \(\nu = 0.49\) for quasi-incompressibility

- Soft tissues are often modelled as incompressible materials
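
A short sketch of the Saint Venant-Kirchhoff energy, assuming the standard conversion from Young's modulus and Poisson's ratio to the Lamé parameters; the Young's modulus value below is illustrative and the function names are hypothetical.

```python
import numpy as np

def lame_parameters(young, poisson):
    """Standard isotropic conversion (E, nu) -> (lambda, mu)."""
    lam = young * poisson / ((1.0 + poisson) * (1.0 - 2.0 * poisson))
    mu = young / (2.0 * (1.0 + poisson))
    return lam, mu

def svk_energy(E_gl, lam, mu):
    """Psi = lambda/2 tr(E)^2 + mu E:E for a Green-Lagrange tensor E_gl."""
    return 0.5 * lam * np.trace(E_gl) ** 2 + mu * np.sum(E_gl * E_gl)

# nu = 0.49 for quasi-incompressibility; Young's modulus in MPa (illustrative).
lam, mu = lame_parameters(young=5.0e-3, poisson=0.49)
psi = svk_energy(0.05 * np.eye(3), lam, mu)
```

With \(\nu = 0.49\) the ratio \(\lambda / \mu = 2\nu / (1 - 2\nu) = 49\), which shows how strongly volumetric strains are penalised.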

Reduced-order model (ROM)

- Build a surrogate model

- Parametrised solution \(\left(\textcolor{Blue}{\boldsymbol{x}}, \textcolor{LGreen}{\left\{\mu_i\right\}_{i \in \mathopen{~[\!\![~}1, \beta \mathclose{~]\!\!]}}}\right)\)

- No computations online

- On the fly evaluation of the model

- Real-time parameter estimation

Outline


II - Methods


Interpolation of the solution

- FEM finite admissible space

\[ \mathcal{U}_h = \left\{\boldsymbol{u}_h \; | \; \boldsymbol{u}_h \in \text{Span}\left( \left\{ N_i^{\Omega}\left(\boldsymbol{x} \right)\right\}_{i \in \mathopen{~[\!\![~}1,N\mathclose{~]\!\!]}} \right)^d \text{, } \boldsymbol{u}_h = \boldsymbol{u}_d \text{ on }\partial \Omega_d \right\} \]

- Interpretability

- Kronecker property

- Easy to prescribe Dirichlet boundary conditions

- Mesh adaptation not directly embedded in the method

- Fully connected neural networks (e.g., PINNs)

\[ \boldsymbol{u} \left(x_{0,0,0} \right) = \sum\limits_{i = 0}^C \sum\limits_{j = 0}^{N_i} \sigma \left( b_{i,j} + \sum\limits_{k = 0}^{M_{i,j}} \omega_{i,j,k}~ x_{i,j,k} \right) \]

- All interpolation parameters are trainable

- Benefits from ML developments

- Not easily interpretable

- Difficult to prescribe Dirichlet boundary conditions

- HiDeNN framework (Liu et al., 2023; Park et al., 2023; Zhang et al., 2021)

- Best of both worlds

- Reproducing FEM interpolation with constrained sparse neural networks

- \(N_i^{\Omega}\) are sparse neural networks (SNNs) with constrained weights and biases

- Fully interpretable parameters

- Continuous interpolated field that can be automatically differentiated

- Runs on GPUs
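
To illustrate how a FEM shape function can be reproduced by a small constrained network, here is one possible construction of a 1D linear hat function from ReLU units, whose weights and biases are fully determined by the nodal coordinates. This is a sketch under that assumption, not necessarily the exact architecture used in the cited HiDeNN works; the mesh and names are hypothetical.

```python
import numpy as np

def relu(z):
    return np.maximum(z, 0.0)

def hat_function(x, x_left, x_node, x_right):
    """Linear shape function of node x_node as a two-layer ReLU network.

    The only free quantities are the nodal coordinates, which act as
    interpretable (and trainable) parameters.
    """
    left = relu((x_node - x) / (x_node - x_left))      # active left of the node
    right = relu((x - x_node) / (x_right - x_node))    # active right of the node
    return relu(1.0 - left - right)

nodes = np.array([0.0, 0.3, 0.7, 1.0])                 # non-uniform 1D mesh
x = np.linspace(0.3, 0.7, 5)                           # points in the middle element
N1 = hat_function(x, nodes[0], nodes[1], nodes[2])
N2 = hat_function(x, nodes[1], nodes[2], nodes[3])
N1_at_nodes = hat_function(nodes, nodes[0], nodes[1], nodes[2])
```

The Kronecker property and the partition of unity on the shared element are preserved, so Dirichlet conditions can be prescribed exactly as in standard FEM.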

Solving the mechanical problem (Škardová et al., 2025).

Solving the mechanical problem amounts to finding the continuous displacement field minimising the potential energy \[E_p\left(\boldsymbol{u}\right) = \frac{1}{2} \int_{\Omega}\boldsymbol{E} : \mathbb{C} : \boldsymbol{E} ~\mathrm{d}\Omega- \int_{\partial \Omega_N}\boldsymbol{F}\cdot \boldsymbol{u} ~\mathrm{d}A- \int_{\Omega}\boldsymbol{f}\cdot\boldsymbol{u}~\mathrm{d}\Omega. \]

In practice

- Compute the loss \(\mathcal{L} := E_p\)

- Find the parameters minimising the loss

- Rely on state-of-the-art optimizers (Adam, L-BFGS, etc.)
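
The loss-minimisation steps above can be sketched on a 1D toy problem: a bar clamped at \(x = 0\), with unit stiffness, a uniform body force and no boundary traction. Plain gradient descent with a hand-written gradient stands in for Adam or L-BFGS and for automatic differentiation; all of these choices are simplifying assumptions made for the example.

```python
import numpy as np

n_el = 8
x = np.linspace(0.0, 1.0, n_el + 1)
h = x[1] - x[0]
body_force = 1.0

def potential_energy(u_free):
    """Discrete E_p with linear elements; u_free holds the unconstrained nodal values."""
    u = np.concatenate(([0.0], u_free))                # Dirichlet condition u(0) = 0
    strain = np.diff(u) / h
    internal = 0.5 * np.sum(strain ** 2) * h
    external = body_force * h * (np.sum(u[1:-1]) + 0.5 * u[-1])
    return internal - external

def energy_gradient(u_free):
    """Gradient of the loss with respect to the free nodal values."""
    u = np.concatenate(([0.0], u_free))
    strain = np.diff(u) / h
    grad = np.zeros_like(u)
    grad[:-1] -= strain
    grad[1:] += strain
    grad[1:-1] -= body_force * h
    grad[-1] -= 0.5 * body_force * h
    return grad[1:]

u_free = np.zeros(n_el)
step = h / 4.0                                         # below 1 / (largest stiffness eigenvalue)
for _ in range(5000):
    u_free -= step * energy_gradient(u_free)

u_exact = x[1:] - 0.5 * x[1:] ** 2                     # analytical solution of u'' = -1
```

The nodal values are the only trainable parameters here; adding the nodal coordinates to the parameter list would correspond to the mesh adaptation mentioned in the talk.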

Degrees of freedom (DoFs)

- Nodal values (standard FEM DoFs)

- Nodal coordinates (Mesh adaptation)

- hr-adaptivity

h-adaptivity: red-green refinement

Technical details:

| (Škardová et al., 2025) | |
|---|---|
| Talk | 9am - 9:40am |

Reduced-order modelling (ROM)

Low-rank approximation of the solution to avoid the curse of dimensionality with \(\beta\) parameters

Full-order discretised model

- \(N\) spatial shape functions

- Span a finite spatial space of dimension \(N\)

- Requires computing \(N\times \beta\) associated parametric functions

Reduced-order model

- \(m \ll N\) spatial modes

- Similar to global shape functions

- Span a smaller space

- Galerkin projection
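
A minimal sketch of the Galerkin projection onto \(m \ll N\) global modes, for a linear model system \(\boldsymbol{K}\boldsymbol{u} = \boldsymbol{f}\). The stiffness matrix is a generic tridiagonal example, and the basis deliberately contains the full-order solution only to verify the projection; both are assumptions made for illustration.

```python
import numpy as np

def galerkin_reduced_solve(K, f, V):
    """Project K u = f onto the columns of V and solve the m x m reduced system."""
    K_red = V.T @ K @ V                    # reduced stiffness (m x m)
    f_red = V.T @ f                        # reduced load (m)
    a = np.linalg.solve(K_red, f_red)      # reduced coordinates
    return V @ a                           # back to the full space

N = 50
K = 2.0 * np.eye(N) - np.eye(N, k=1) - np.eye(N, k=-1)   # SPD model stiffness
f = np.ones(N)
u_full = np.linalg.solve(K, f)

rng = np.random.default_rng(0)
V = np.column_stack([u_full, rng.standard_normal(N)])    # m = 2 modes
u_rom = galerkin_reduced_solve(K, f, V)
```

Because the reduced space contains the full-order solution, the 2 x 2 reduced system recovers it exactly; in practice the quality of the ROM depends entirely on how the basis is built.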

Finding the reduced-order basis

- Linear Normal Modes (eigenmodes of the structure) (Hansteen & Bell, 1979)

- Do not account for the loading and behaviour specifics

- Proper Orthogonal Decomposition (Chatterjee, 2000; Radermacher & Reese, 2013)

- Requires a careful selection of the snapshots and costly computations

- Reduced basis method (Maday & Rønquist, 2002) with EIM (Barrault et al., 2004)

- Relies on prior expensive computations
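
For comparison with the a posteriori approaches listed above, a Proper Orthogonal Decomposition basis can be sketched as a truncated SVD of a snapshot matrix. The snapshots below are synthetic (a rank-2 family built from two sine functions), and the energy threshold and function name are assumptions.

```python
import numpy as np

def pod_basis(snapshots, energy=1.0 - 1e-10):
    """Left singular vectors capturing the requested fraction of snapshot energy."""
    U, s, _ = np.linalg.svd(snapshots, full_matrices=False)
    cumulative = np.cumsum(s ** 2) / np.sum(s ** 2)
    m = int(np.searchsorted(cumulative, energy) + 1)
    return U[:, :m]

x = np.linspace(0.0, 1.0, 200)
mus = np.linspace(1.0, 2.0, 15)
# Each column is one (synthetic) full-order solution for one parameter value.
snapshots = np.column_stack(
    [mu * np.sin(np.pi * x) + mu ** 2 * np.sin(2.0 * np.pi * x) for mu in mus]
)
basis = pod_basis(snapshots)
```

Each snapshot column stands for one full-order solve, which is where the offline cost of such methods comes from.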

Proper Generalised Decomposition (PGD)

- Proper Generalised Decomposition (PGD) (Chinesta et al., 2011; Ladeveze, 1985)

Tensor Decomposition

- \(\textcolor{LGreen}{\left\{\mu_i\right\}_{i \in \mathopen{~[\!\![~}1, \beta \mathclose{~]\!\!]}}}\) parameters (material, shape, loading, etc.)

- Curse of dimensionality

\[ \textcolor{VioletLMS_2}{\boldsymbol{u}}\left(\textcolor{Blue}{\boldsymbol{x}}, \textcolor{LGreen}{\left\{\mu_i\right\}_{i \in \mathopen{~[\!\![~}1, \beta \mathclose{~]\!\!]}}}\right) = \sum\limits_{i=1}^m \textcolor{Blue}{\overline{\boldsymbol{u}}_i(\boldsymbol{x})} ~\textcolor{LGreen}{\prod_{j=1}^{\beta}\lambda_i^j(\mu^j)}\]

- \(\textcolor{Blue}{\overline{\boldsymbol{u}}_i(\boldsymbol{x})}\) space modes

- \(\textcolor{LGreen}{\lambda_i^j(\mu^j)}\) parameter modes
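
Evaluating the separated representation above only requires products of parameter modes and a sum over the \(m\) space modes. The sketch below assumes \(\beta = 2\) parameters and random mode values sampled on grids; the array names and sizes are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)
m, N, n_mu1, n_mu2 = 3, 4, 5, 6
space_modes = rng.standard_normal((m, N))      # \bar{u}_i(x) sampled at N nodes
lambda_1 = rng.standard_normal((m, n_mu1))     # \lambda_i^1(\mu^1) on its grid
lambda_2 = rng.standard_normal((m, n_mu2))     # \lambda_i^2(\mu^2) on its grid

def evaluate_at(a, b):
    """Field for the a-th value of mu^1 and the b-th value of mu^2."""
    weights = lambda_1[:, a] * lambda_2[:, b]  # product over the parameter modes
    return weights @ space_modes               # sum over the m modes

# Full tensor u[x, mu^1, mu^2], built here only to check the point evaluation.
full_tensor = np.einsum("ix,ia,ib->xab", space_modes, lambda_1, lambda_2)
u_point = evaluate_at(2, 3)
```

The point evaluation never forms the full tensor, which is why the online cost stays small.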

Discretised problem

- From \(\textcolor{VioletLMS_2}{N\times\prod\limits_{j=1}^{~\beta} N_{\mu}^j}\) unknowns to \(\textcolor{Blue}{m\times\left(N + \sum\limits_{j=1}^{\beta} N_{\mu}^j\right)}\)

- e.g. \(\textcolor{VioletLMS_2}{10000 \times 1000^2 = 10^{10}} \gg \textcolor{Blue}{ 5\left( 10 000+ 2\times 1000 \right) = 6 \times 10^4}\)

- Finding the tensor decomposition by minimising the energy \[ \left(\left\{\overline{\boldsymbol{u}}_i \right\}_{i\in \mathopen{~[\!\![~}1,m\mathclose{~]\!\!]}},\left\{\lambda_i^j \right\}_{ \begin{cases} i\in \mathopen{~[\!\![~}1,m\mathclose{~]\!\!]}\\ j\in \mathopen{~[\!\![~}1,\beta \mathclose{~]\!\!]} \end{cases} } \right) = \mathop{\mathrm{arg\,min}}_{ \begin{cases} \left(\overline{\boldsymbol{u}}_1, \left\{\overline{\boldsymbol{u}}_i \right\} \right) & \in \mathcal{U}\times \mathcal{U}_0 \\ \left\{\left\{\lambda_i^j \right\}\right\} & \in \left( \bigtimes_{j=1}^{~\beta} \mathcal{L}_2\left(\mathcal{B}_j\right) \right)^{m-1} \end{cases}} ~\underbrace{\int_{\mathcal{B}}\left[E_p\left(\boldsymbol{u}\left(\boldsymbol{x},\left\{\mu_i\right\}_{i \in \mathopen{~[\!\![~}1, \beta \mathclose{~]\!\!]}}\right), \mathbb{C}, \boldsymbol{F}, \boldsymbol{f} \right) \right]\mathrm{d}\beta}_{\mathcal{L}} \label{eq:min_problem} \]
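
The unknown counts quoted in the list above can be checked with a few lines of arithmetic, using the same values (\(N = 10000\), \(N_{\mu} = 1000\), \(\beta = 2\), \(m = 5\)):

```python
N, n_mu, beta, m = 10_000, 1_000, 2, 5

full_order_unknowns = N * n_mu ** beta     # N x prod_j N_mu^j
pgd_unknowns = m * (N + beta * n_mu)       # m x (N + sum_j N_mu^j)
reduction_factor = full_order_unknowns / pgd_unknowns
```

The full-order count grows exponentially with \(\beta\), whereas the separated count grows only linearly.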

Proper Generalised Decomposition (PGD)

- Building the low-rank tensor decomposition greedily (Ladeveze, 1985; Nouy, 2010)

\[ \boldsymbol{u}\left(\boldsymbol{x}, \left\{\mu_i\right\}_{i \in \mathopen{~[\!\![~}1, \beta \mathclose{~]\!\!]}}\right) = \overline{\boldsymbol{u}}(\boldsymbol{x}) ~\prod_{j=1}^{\beta}\lambda^j(\mu^j) \]

\[ \boldsymbol{u}\left(\boldsymbol{x}, \left\{\mu_i\right\}_{i \in \mathopen{~[\!\![~}1, \beta \mathclose{~]\!\!]}}\right) = \textcolor{red}{\sum\limits_{i=1}^{2}} \overline{\boldsymbol{u}}_{\textcolor{red}{i}}(\boldsymbol{x}) ~\prod_{j=1}^{\beta}\lambda_{\textcolor{red}{i}}^j(\mu^j) \]

\[ \boldsymbol{u}\left(\boldsymbol{x}, \left\{\mu_i\right\}_{i \in \mathopen{~[\!\![~}1, \beta \mathclose{~]\!\!]}}\right) = \sum\limits_{i=1}^{\textcolor{red}{m}} \overline{\boldsymbol{u}}_i(\boldsymbol{x}) ~\prod_{j=1}^{\beta}\lambda_i^j(\mu^j) \]

Greedy algorithm

- Start with a single mode

- Minimise the loss until stagnation

- Add a new mode

- If loss decreases, continue training

- Else stop and return the converged model
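
The greedy loop above can be sketched on a toy problem. As a loud simplification, the loss here is a least-squares misfit to a known rank-2 field \(u(x, \mu)\) rather than the potential energy, and alternating least squares replaces gradient-based training; all modes are refitted each time a mode is added. Function names and tolerances are hypothetical.

```python
import numpy as np

def fit_all_modes(target, space, param, sweeps=50):
    """Alternating least squares on ALL modes of target ~ space @ param.T."""
    for _ in range(sweeps):
        space = np.linalg.lstsq(param, target.T, rcond=None)[0].T
        param = np.linalg.lstsq(space, target, rcond=None)[0].T
    return space, param

def greedy_decomposition(target, tol=1e-10, max_modes=10, seed=0):
    rng = np.random.default_rng(seed)
    n_x, n_mu = target.shape
    space, param = np.zeros((n_x, 0)), np.zeros((n_mu, 0))
    loss = np.sum(target ** 2)                 # loss of the empty model
    threshold = tol * loss
    for _ in range(max_modes):
        # Add a new (randomly initialised) mode and retrain every mode.
        trial_space = np.hstack([space, rng.standard_normal((n_x, 1))])
        trial_param = np.hstack([param, rng.standard_normal((n_mu, 1))])
        trial_space, trial_param = fit_all_modes(target, trial_space, trial_param)
        trial_loss = np.sum((target - trial_space @ trial_param.T) ** 2)
        if loss - trial_loss <= threshold:
            break                              # no improvement: discard the new mode
        space, param, loss = trial_space, trial_param, trial_loss
    return space, param, loss

x = np.linspace(0.0, 1.0, 30)
mu = np.linspace(1.0, 2.0, 20)
target = np.outer(np.sin(np.pi * x), mu) + np.outer(x ** 2, mu ** 2)   # rank 2
space, param, loss = greedy_decomposition(target)
```

On this rank-2 field the loop stops after the third mode fails to reduce the loss, and that last mode is dropped from the returned model.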

The PGD is

- An a priori ROM technique

- Building the model from scratch on the fly

- No full-order computations

- Reduced-order basis tailored to the specifics of the problem (Daby-Seesaram et al., 2023)

Note

- Training of the full tensor decomposition, not only the \(m\)-th mode

- Actual minimisation implementation of the PGD

- No need to solve a new problem for any new parameter

- The online stage is a simple evaluation of the tensor decomposition

Neural Network PGD

Graphical implementation of Neural Network PGD

\[ \boldsymbol{u}\left(\textcolor{Blue}{\boldsymbol{x}}, \textcolor{LGreen}{\left\{\mu_i\right\}_{i \in \mathopen{~[\!\![~}1, \beta \mathclose{~]\!\!]}}}\right) = \sum\limits_{i=1}^m \textcolor{Blue}{\overline{\boldsymbol{u}}_i(\boldsymbol{x})} ~\textcolor{LGreen}{\prod_{j=1}^{\beta}\lambda_i^j(\mu^j)} \]

Interpretable NN-PGD

- No black box

- Fully interpretable implementation

- Great transfer learning capabilities

- Straightforward implementation

- Benefiting from current ML developments

- Straightforward definition of the physical loss using auto-differentiation

Stiffness and external forces parametrisation

Illustration of the surrogate model in use (Daby-Seesaram et al., 2025)

Parameters

- Parametrised stiffness \(E\)

- Parametrised external force \(\boldsymbol{f} = \begin{bmatrix} ~ \rho g \sin\left( \theta \right) \\ -\rho g \cos\left( \theta \right) \cos\left( \phi \right) \\ \rho g \cos\left( \theta \right) \sin\left( \phi \right) \end{bmatrix}\)
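
The parametrised body force above can be written as a small function of the two angles; the density and gravity values below are illustrative and the function name is hypothetical.

```python
import numpy as np

def gravity_body_force(rho, g, theta, phi):
    """Body force f(theta, phi) as defined above (angles in radians)."""
    return rho * g * np.array([
        np.sin(theta),
        -np.cos(theta) * np.cos(phi),
        np.cos(theta) * np.sin(phi),
    ])

f_tilted = gravity_body_force(rho=1.0, g=9.81, theta=np.deg2rad(241.0), phi=0.3)
f_upright = gravity_body_force(rho=1.0, g=9.81, theta=0.0, phi=0.0)
```

Whatever the angles, the magnitude stays equal to \(\rho g\): the two parameters only rotate the direction of gravity relative to the organ.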

NN-PGD

- Immediate evaluation of the surrogate model (~100 Hz)

- Straightforward differentiation with respect to the input parameters

Convergence of the greedy algorithm

Loss convergence

Loss decay

Construction of the ROB

- A new mode is added when the loss decay vanishes (stagnation)

- Adding a new mode gives extra latitude to improve predictivity

Accuracy with respect to FEM solutions

Solution and error fields (figures) for two parameter sets:

- \(E = 3.8 \times 10^{-3}\mathrm{ MPa}, \theta = 241^\circ\)

- \(E = 3.1 \times 10^{-3}\mathrm{ MPa}, \theta = 0^\circ\)

| \(E\) (kPa) | \(\theta\) (rad) | Relative error |
|---|---|---|
| 3.80 | 1.57 | \(1.12 \times 10^{-3}\) |
| 3.80 | 4.21 | \(8.72 \times 10^{-4}\) |
| 3.14 | 0 | \(1.50 \times 10^{-3}\) |
| 4.09 | 3.70 | \(8.61 \times 10^{-3}\) |
| 4.09 | 3.13 | \(9.32 \times 10^{-3}\) |
| 4.62 | 0.82 | \(2.72 \times 10^{-3}\) |
| 5.01 | 2.26 | \(5.35 \times 10^{-3}\) |
| 6.75 | 5.45 | \(1.23 \times 10^{-3}\) |
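
The figure captions above use MPa and degrees, whereas the table uses kPa and radians. A small conversion helper (hypothetical name) makes the correspondence between the two explicit:

```python
import numpy as np

def to_table_units(young_mpa, theta_deg):
    """Convert (MPa, degrees) to the (kPa, rad) units used in the table."""
    return young_mpa * 1.0e3, np.deg2rad(theta_deg)

E_kpa, theta_rad = to_table_units(3.8e-3, 241.0)
```

With these inputs the caption \(E = 3.8 \times 10^{-3}\) MPa, \(\theta = 241^\circ\) corresponds to the table row with 3.80 kPa and 4.21 rad.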

Multi-level training

Strategy

- The interpretability of the NN allows great transfer learning capabilities

- Start with coarse (cheap) training

- Followed by fine tuning, refining all modes

- Extends the idea put forward by Giacoma et al. (2015)

Note

- The structure of the tensor decomposition is kept throughout the multi-level training

- The last mode of each level is irrelevant by construction

- It is removed before passing onto the next refinement level
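
One way to picture the coarse-to-fine transfer, assuming (this is not stated in the talk) that a space mode trained on a coarse mesh is passed to the finer mesh by piecewise-linear interpolation of its nodal values. The 1D meshes and names below are hypothetical.

```python
import numpy as np

def transfer_mode(coarse_nodes, coarse_values, fine_nodes):
    """Initialise a fine-mesh space mode from coarse nodal values (1D, linear)."""
    return np.interp(fine_nodes, coarse_nodes, coarse_values)

coarse_nodes = np.linspace(0.0, 1.0, 5)
fine_nodes = np.linspace(0.0, 1.0, 17)              # every 4th node is a coarse node
coarse_mode = np.sin(np.pi * coarse_nodes)          # stand-in for a trained mode
fine_mode = transfer_mode(coarse_nodes, coarse_mode, fine_nodes)
```

Because the network parameters are nodal values, the fine model starts from the same field as the coarse one, and fine tuning only has to correct it.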

III - Patient-specific parametrisation


Shape model - registration (Gangl et al., 2021).

Computing the mappings

- The objective is to find the domain \(\omega\) occupied by a given segmented shape in a 3D image

- It is the solution of

\[\omega = \arg \min\underbrace{ \int_{\omega} I\left( x \right) \mathrm{d}\omega}_{\Psi\left(\omega\right)}\]

With the requirement that \(I\left( x \right) \begin{cases} < 0 & \forall x \in \mathcal{S} \\ > 0 & \forall x \not\in \mathcal{S} \end{cases}\), where \(\mathcal{S}\) is the image footprint of the shape to be registered

The domain \(\omega\) can be described by a mapping \[\Phi\left(X\right) = I_d + u\left(X\right)\] from a reference shape \(\Omega\), leading to \[\int_{\omega} I\left( x \right) \mathrm{d}\omega = \int_{\Omega} I \circ \Phi \left( X \right) J \mathrm{d}\Omega\] where \(J = \det\left(\nabla \Phi\right)\) is the Jacobian of the mapping
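
The pull-back of the integral to the reference shape can be checked numerically in 1D. The image intensity, the displacement and the quadrature rule below are all assumptions chosen so that the exact value is known: \(\Omega = [0, 1]\) is mapped to \(\omega = [0, 1.2]\).

```python
import numpy as np

def image(x):
    return x ** 2 - 0.5                  # illustrative 1D "image" intensity

def displacement(X):
    return 0.2 * X ** 2                  # u(X), so Phi(X) = X + u(X)

def jacobian(X):
    return 1.0 + 0.4 * X                 # J = dPhi/dX

# 4-point Gauss-Legendre rule, exact for the degree-5 integrand below.
points, weights = np.polynomial.legendre.leggauss(4)
X = 0.5 * (points + 1.0)                 # map Gauss points from [-1, 1] to [0, 1]
w = 0.5 * weights

pulled_back = np.sum(w * image(X + displacement(X)) * jacobian(X))
direct = 1.2 ** 3 / 3.0 - 0.5 * 1.2      # exact integral of I over omega
```

Both values agree, so the cost can be evaluated on the fixed reference mesh while only the displacement field changes.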

Shape derivatives

- \(D\Psi\left(\omega\right)\left(\boldsymbol{u}^*\right) = \int_{\Omega} \nabla I \cdot u^* J \mathrm{d}\Omega + \underbrace{\int_{\Omega}I \circ \Phi\left(X\right) (F^T)^{-1} : \nabla u^* J \mathrm{d}\Omega}_{\int_{\omega}I\left(x\right) \mathrm{div}\left( u^* \circ \Phi \right) \mathrm{d}\omega}\)

Sobolev gradient (Neuberger, 1985; Neuberger, 1997)

\[D\Psi\left(u\right)\left(v^*\right) = \left(\nabla^H \Psi \left(u\right), v^* \right)_H\]

- Classically:

- \(\left(\boldsymbol{u}, \boldsymbol{v}^* \right)_H := \int_{\omega} \boldsymbol{\nabla_s}\boldsymbol{u}:\boldsymbol{\nabla_s}\boldsymbol{v}^*\mathrm{d}\omega + \alpha \int_{\omega} \boldsymbol{u} \cdot \boldsymbol{v}^*\mathrm{d}\omega\)
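
In discrete form, the Sobolev gradient is obtained by solving a linear system with the matrix of the inner product above. The sketch below is a 1D analogue on a uniform mesh with linear elements and a lumped mass matrix; these discretisation choices and the function name are assumptions.

```python
import numpy as np

def sobolev_gradient(derivative, h, alpha):
    """Solve (K + alpha M) g = d, where d_i = DPsi(N_i) for each shape function."""
    n = derivative.size
    K = (2.0 * np.eye(n) - np.eye(n, k=1) - np.eye(n, k=-1)) / h
    K[0, 0] = K[-1, -1] = 1.0 / h                  # free ends
    M = h * np.eye(n)
    M[0, 0] = M[-1, -1] = 0.5 * h                  # lumped mass matrix
    A = K + alpha * M                              # matrix of the H inner product
    return np.linalg.solve(A, derivative), A

n = 21
h = 1.0 / (n - 1)
d = np.zeros(n)
d[n // 2] = 1.0                                    # very localised shape derivative
g, A = sobolev_gradient(d, h, alpha=1.0)
```

A derivative concentrated on a single node yields a gradient spread over the whole domain, which is the smoothing effect sought in the registration.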

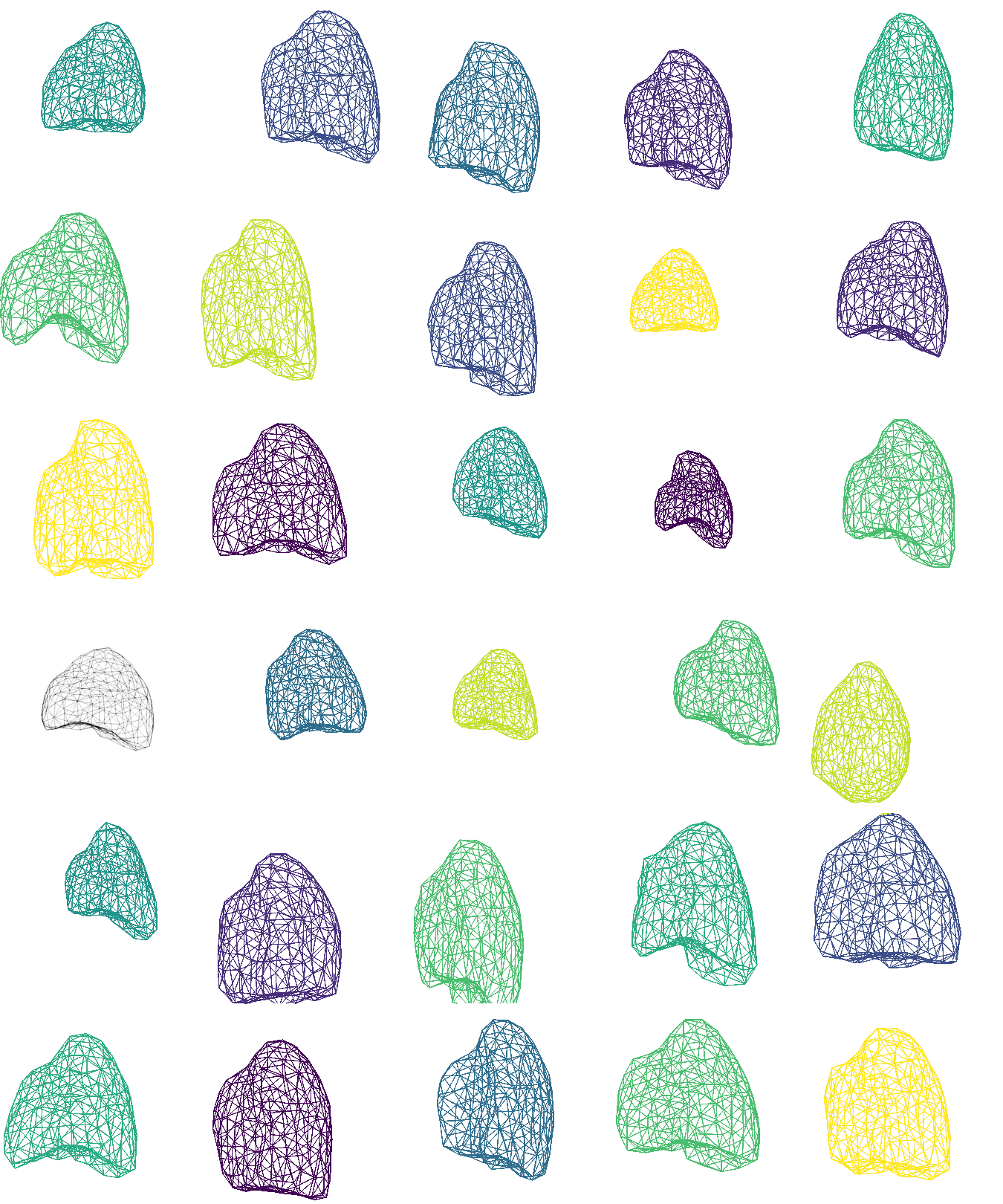

Shape model - parametrisation

Encoding the shape of the lung in a low-dimensional space

- In order to feed the surrogate model we need a parametrisation of the lung

- ROM on the shape mappings library

Conclusion


- Robust and straightforward general implementation of a NN-PGD

- Interpretable

- Benefits from all recent developments in machine learning

- Surrogate modelling of parametrised PDE solution

- Promising results on a simple toy case

Perspectives for patient-specific applications

- Implementation of the poro-mechanics lung model (Patte et al., 2022)

- Further work on parametrising the geometries

- Inputting geometry parameters in the NN-PGD

- Error quantification on the estimated parameters

Technical perspectives

- Stochastic integration (curse of dimensionality for non-separable quantities)

- Non-linear reduced order modelling

- Do not hesitate to check the GitHub page